Web Scraping without Getting Blocked: 5 Common Reasons You're Blocked and How to Avoid Them

Contents

Web scraping helps you extract large amounts of invaluable information from websites quickly and efficiently. By using web scraping or browser automation libraries like Beautiful Soup, Scrapy, Selenium, etc., you can gather the data that you need much more quickly compared to copying and pasting them manually from the websites. However, this also comes with some challenges.

The most common challenge is getting blocked from a website. Websites prohibit web scraping activities for several reasons such as to ensure data privacy, protect intellectual property, preserve server resources, and other security concerns.

Various types of techniques can be implemented to detect and prevent web scraping activities. To scrape data from a website successfully, it’s important to know which type of anti-scraping measures are used and why you're getting blocked. Here are some of the most common reasons and how you can avoid them:

Too Many Requests from Your IP Address

There’s a high probability that you’ll be blocked from a website if you’re making too many requests in a short amount of time. Websites can block requests coming from the same IP address if they are too frequent as this behavior is deemed to be suspicious. Once a certain pattern is detected or a threshold is passed, your requests will be blocked.

Solution 1: Rotate IP Address

If your IP address is blocked by a website, one solution is to rotate your IP address. IP rotation refers to switching your IP regularly between multiple addresses so that the website does not see your requests as suspicious. You can use a proxy/IP rotation service or proxy provider that supports IP rotation like IPRoyal, Froxy, and Proxyrack. You can even write an IP/proxy rotator yourself! Usually, a different IP address will be used for every new request. That said, you can also rotate your IP address based on different conditions, depending on your requirements.

Solution 2: Add Delays Between Requests

Besides security reasons, websites also implement rate limits on requests coming from the same IP address to protect server stability and ensure fair resource allocation for all users. Once you have hit the request threshold, the website will start rejecting or delaying your requests. Other than rotating your IP address, you can also incorporate delays between your requests to avoid overloading the website with an excessive number of requests. This makes your requests more human-like as humans don’t usually make requests at a fixed interval.

Your IP Address is Blacklisted

One possible reason for being blocked from a website even if you haven't made an excessive number of requests within a short time could be that your IP address has been blacklisted. That could be for several reasons:

- The website blocks IP ranges from certain countries and yours is in that range.

- Your IP address is associated with suspicious or malicious activities, such as hacking, spamming, or other unauthorized actions.

- If you are using a shared IP address, another user on the same IP might be engaging in malicious activities.

- Your IP address is mistakenly blacklisted due to technical errors, false positives, or misinterpretation of activity logs.

Solution: Use Proxies/VPNs

If your IP address is blocked because of the reasons above, try using a proxy server or VPN to mask your original IP address. As you do not make requests directly to the target website using your original IP address, you will be able to access it. However, one thing to note is that when you are using a proxy server, there’s a probability that it could be blocked too as some websites restrict access from proxy servers. To reduce the chances of your proxy getting blocked, use residential or mobile proxies as they are more similar to genuine connections made by humans.

🐻 Bear Tips: You can use a blacklist checker to check whether your IP address is blacklisted by your target website.

The User-Agent Header is Missing

To block requests that are made by robots, some websites check the requests’ user agent. The User-Agent header indicates which browser the request coming from is using and websites often use it to differentiate between a real human and a scraping bot. When you don't include the User-Agent header in your scraping requests, it can raise suspicion and trigger the website to block you.

Solution: Set a User-Agent in Your Request Header

To avoid being blocked due to the lack of the User-Agent header while scraping, you should include it in your requests. Different scraping/browser automation libraries have different ways to set one. Refer to the documentation and set one of these in the header:

- Chrome -

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.5112.79 Safari/537.36 - Firefox -

Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:101.0) Gecko/20100101 Firefox/101.0 - Microsoft Edge -

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.19582

To decrease the likelihood of getting blocked, it’s best to use browser agents that are popular and updated. This helps create the impression that the requests are coming from a genuine user. Besides that, it is also good to rotate between several different user agents so that the requests don’t cause suspicion.

🐻 Bear Tips: Here’s a list of other user agents you can use.

The Website Checks for Other Headers

Besides the User-Agent header, the website could also check for other headers. However, there are too many of them that could be checked. To help you identify the missing header, here are some common ones that you can start from:

- Referer - The Referer header indicates the URL of the current request’s origin. Some websites may analyze this header to verify that requests originate from their own pages or other reliable sources.

- Cookie - Websites may check the presence and validity of cookies to determine if the request is associated with an authenticated user session.

- Accept - The Accept header specifies the type of content that the client can handle. Websites may examine this header to ensure that the client accepts the expected content type, such as HTML, JSON, or XML.

Solution: Set Appropriate Headers in Your Requests

Identify the headers that the website expects and add them to your scraping requests. Make them more genuine by including headers like the ones listed above and other common ones such as Accept-Language, Accept-Encoding, Connection, etc. This helps to make them appear more like from a legitimate user.

The Website Implements CAPTCHA

Getting CAPTCHA is a common roadblock for web scraping. It is often implemented on websites to differentiate between human users and scraping bots as it requires user interaction to solve the puzzle. The task should be easy for a human but not for robots. Therefore, it can help websites to filter out scraping bots.

Solution: Use CAPTCHA Solving Services

Although the CAPTCHA puzzles are designed to filter out and block requests from robots, there are ways to overcome them. You can use a CAPTCHA-solving service like 2Captcha to solve the puzzle. It provides an API that allows you to add their CAPTCHA solving capability to your scraping bot and automate the process.

Other Tips to Scrape Websites Without Getting Blocked

There may be other reasons other than those listed above that could cause you to be blocked while web scraping. When you can’t pinpoint the reason, consider following some of the tips below:

Read robot.txt Before Scraping

The robots.txt file is used by websites to implement the Robots Exclusion Protocol and communicate instructions to web crawlers and bots about which parts of the site they are allowed to visit. Before scraping a website, check if it has a robots.txt file at /robot.txt. For example, the robot.txt file for www.abc.com should be found on www.abc.com/robot.txt.

If there is such a file on your target website, read and adhere to their guidelines so that you can minimize the probability of being blocked and scrape efficiently.

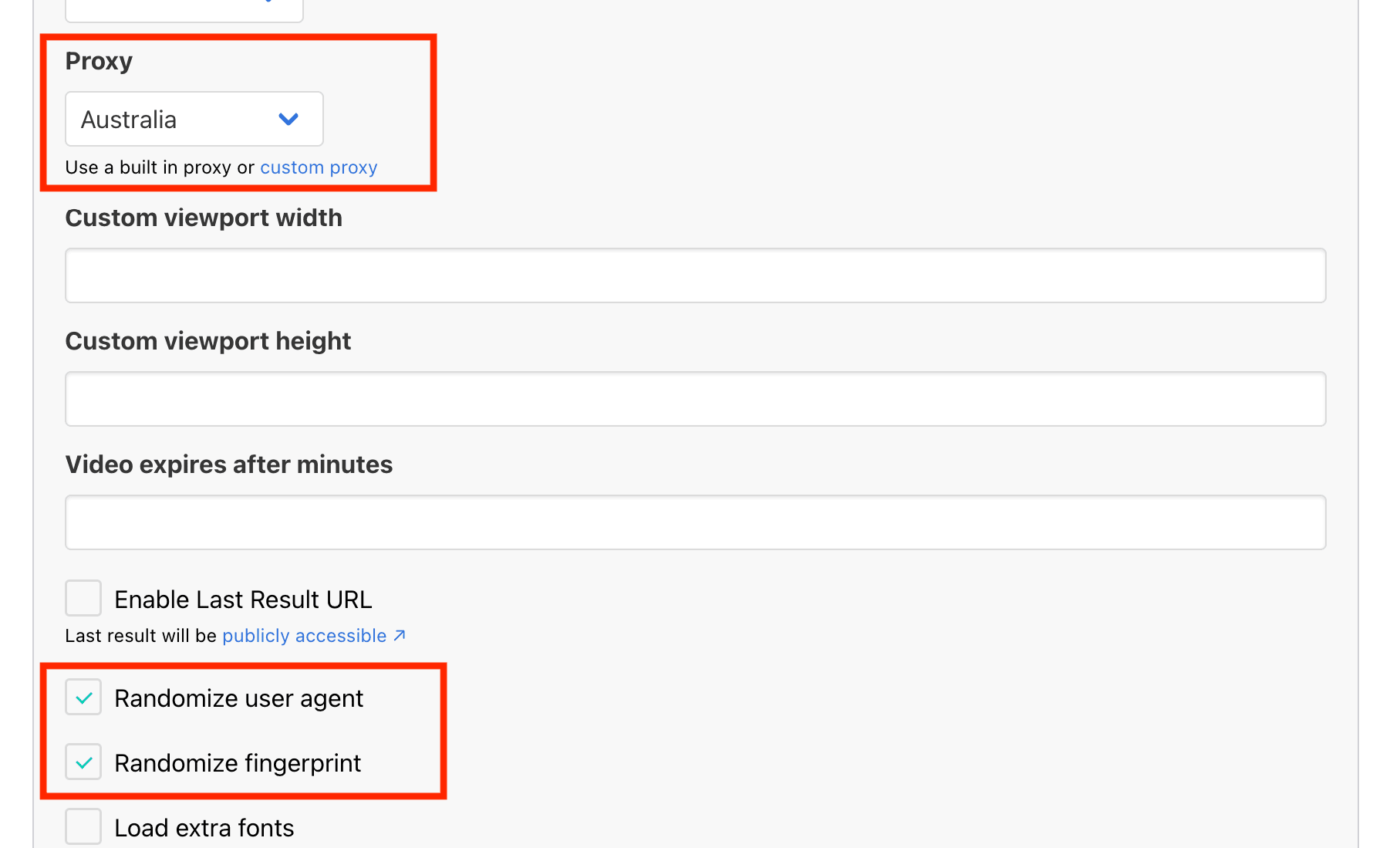

Randomize Browser Fingerprint

Similar to a human’s fingerprint, a browser fingerprint is unique and can be extracted from a web browser for identification. It is derived from various browser attributes, settings, and behaviors such as:

- Operating system

- Time zone

- Screen resolution and color depth

- Language and fonts

- Browser type, version, and extensions

- HTTP headers

- User-agent

Besides those listed above, many other properties are used to create a browser fingerprint. Therefore, it is almost impossible to have a duplicated fingerprint, hence can be used to identify a unique browser. By manipulating the values of some of the properties used, you will have different fingerprints when web scraping, making it less detectable as a scraping bot.

Use an API

Many websites offer APIs to access their data. Before scraping a website, check if they have an API to avoid wasting your time and effort. Otherwise, you can also consider using a third-party API like Browserbear that helps you to scrape a website with the methods above implemented:

P.S. Browserbear can also solve CAPTCHA automatically!

Conclusion

Web scraping is a useful method for extracting data from websites efficiently but it comes with its challenges. The rule of thumb to scrape websites without getting blocked is to make your scraping activities appear as human-like as possible. By applying these tips and methods, you should have a lower chance of being blocked. That said, websites can continually update their anti-scraping measures and you will have to update your web scraping method too if that happens.